- More and more companies use recruitment algorithms

- What types of algorithms are used in recruitment?

- Source, screen, match, interview, assess, and more

- The issue of bias remains, also in algorithmic recruitment

- How can we ensure recruitment algorithms actually promote equity?

Mentioning AI in the same breath as human resources and recruitment may seem contradictory, but many HR managers believe that AI can play a significant role in improving the recruitment process, enabling HR professionals to focus more on people and less on tasks. Reasons for using AI-based solutions in HR include time and cost savings, more accurate decision making, and improved employee experience. And while algorithms have the potential to change our lives for the better, there is also a real risk that AI will exacerbate inequality, which often hits the most marginalised and vulnerable people in our society hardest. So, is the use of algorithms in recruitment actually a good idea?

More and more companies use recruitment algorithms

While we tend to focus on how algorithms affect society, the opposite is true as well: our society and the changes that happen globally also influence how widely algorithms are used and how they are applied, particularly in recruitment. The pandemic is one example: it led to widespread job losses, with many more people applying for a limited number of vacancies. To lessen the burden on recruitment and HR departments, more and more companies are deploying recruitment algorithms to make hiring more efficient.

Given that a recruiter currently spends approximately 34 seconds reading a CV, 21 per cent less time than in 2017, we can only imagine what an algorithm could do in those 34 seconds. The pandemic has also forced many companies to screen job applicants remotely instead of interviewing them in person, using tools that analyse and rate CVs and help recruiters assess skill sets, personality traits, cognitive abilities, emotional intelligence, and more.

What types of algorithms are used in recruitment?

Recruitment and hiring platforms like LinkedIn, Indeed, and Monster increasingly use algorithms to determine who sees which job postings and to help recruiters find the right candidate. These algorithms are trained on data about similar or previous applicants and can significantly reduce the time and effort it takes to make the right hire. Recruiters use AI throughout the recruitment process, from advertising jobs and attracting potential applicants to predicting how a prospective candidate will perform on the job.

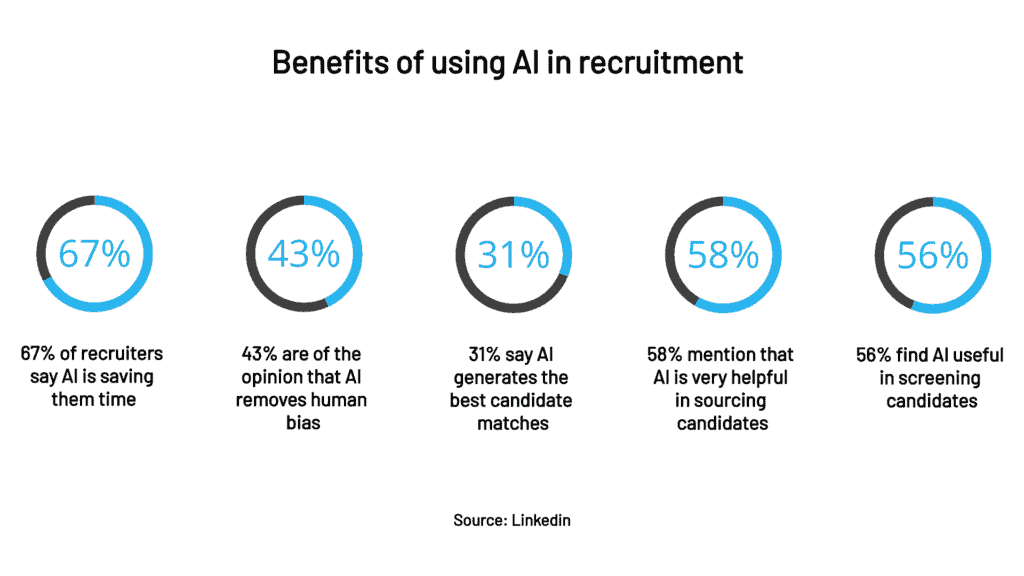

According to Aaron Rieke of the digital technology research group Upturn: “Just like with the rest of the world’s digital advertisements, AI is helping target who sees what job descriptions and who sees what job marketing.” A LinkedIn report on how diversity, new interview methods, data, and AI impact recruitment found that 67 per cent of hiring managers and recruiters worldwide said AI was saving them time, 43 per cent said it removes human bias, and 31 per cent said it generates the best candidate matches. Some 58 per cent of respondents said AI is very helpful in sourcing candidates, and 56 per cent found it useful in screening candidates.

Source, screen, match, interview, assess, and more

Hiring algorithms are designed to help recruiters spend less time reading applications and CVs that ultimately don’t match the job requirements. Instead of manually searching through CVs for candidates with the right qualifications and then spending time interviewing and assessing them, recruiters can now use various algorithms to do a large part of the work.

Sourcing algorithms

Sourcing algorithms are designed to speed up the most time-consuming part of the recruitment process: searching multiple sources for job candidates. The company lists the skills it’s looking for, and the software scans thousands of CVs and profiles on platforms like LinkedIn. The details of the candidates who most closely match the criteria are sent to the HR department so that a human recruiter can shortlist and contact them. Sourcing algorithms not only let recruiters reach out to more potential candidates; they also help locate ‘passive candidates’ who are not visible on the most popular professional networks or haven’t updated their profiles.
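
As a rough illustration of the matching step described above, here is a minimal Python sketch. It assumes that candidate profiles from several sources have already been collected as skill sets keyed by a shared candidate ID. The source names, the `build_shortlist` function, and the scoring rule (the fraction of requested skills a candidate lists) are hypothetical choices for illustration, not how AmazingHiring or any other vendor actually works.

```python
from collections import defaultdict


def merge_profiles(sources):
    """Combine the skill sets found for the same candidate on different (hypothetical) sources."""
    merged = defaultdict(set)
    for profiles in sources.values():
        for profile in profiles:
            merged[profile["id"]] |= set(profile["skills"])
    return merged


def build_shortlist(sources, required_skills, min_score=0.5, top_k=10):
    """Rank candidates by the share of required skills they list and keep the best matches."""
    required = set(required_skills)
    if not required:
        raise ValueError("required_skills must not be empty")
    scored = []
    for candidate_id, skills in merge_profiles(sources).items():
        score = len(skills & required) / len(required)
        if score >= min_score:
            scored.append((candidate_id, score))
    # Highest score first; ties are broken by ID so the output is deterministic.
    scored.sort(key=lambda item: (-item[1], item[0]))
    return scored[:top_k]


# Hypothetical profiles collected from two platforms.
sources = {
    "linkedin": [{"id": "c1", "skills": {"python", "sql"}},
                 {"id": "c2", "skills": {"java"}}],
    "github": [{"id": "c1", "skills": {"docker"}},
               {"id": "c3", "skills": {"python", "docker"}}],
}
print(build_shortlist(sources, {"python", "sql", "docker"}))
```

The shortlist would still go to a human recruiter, as described above. Real tools also have to work out which profiles on different platforms belong to the same person, which this sketch sidesteps by assuming a shared ID.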

The AmazingHiring sourcing tool, for instance, is fully automated and can single out a candidate’s main skills and current professional competencies, then create a list of the most relevant profiles for the role that needs to be filled. All the recruiter needs to do is choose the core skill or specialisation from a list, and AmazingHiring’s AI takes care of the rest, analysing potential candidates’ profiles from platforms such as LinkedIn, Stack Overflow, GitHub, and Reddit, as well as less common ones like Telegram.

Filtering or screening algorithms

Once a set of CVs has been collected, a filtering algorithm analyses them and looks more closely at each candidate. Using predefined behavioural criteria, some filtering algorithms can even assess how compatible a job candidate is with a future employer.

According to Somen Mondal, CEO and co-founder of the screening and matching platform Ideal, which is used to screen 5 million candidates a month, these systems can learn to understand and compare the experience described in candidates’ CVs, and then rank candidates by how closely they match a job opening. Mondal says: “It’s almost like a recruiter Googling a company listed on an application and learning about it.”
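
Commercial screening systems like the one Mondal describes use far richer language models than this, but the basic idea of ranking CVs by how closely they match a job opening can be sketched with a simple bag-of-words cosine similarity. The `rank_cvs` function, the job description, and the sample CV texts below are hypothetical.

```python
import math
import re
from collections import Counter


def tokens(text):
    """Lower-case word counts; punctuation and digits are ignored."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_cvs(job_description, cvs):
    """Return (candidate, similarity) pairs, most similar to the job description first."""
    job = tokens(job_description)
    scored = [(name, cosine(job, tokens(text))) for name, text in cvs.items()]
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))


job = "Data analyst: SQL, Python, dashboards"
cvs = {
    "ana": "Analyst with Python and SQL; built dashboards",
    "ben": "Pastry chef",
    "cho": "SQL reporting",
}
for name, similarity in rank_cvs(job, cvs):
    print(f"{name}: {similarity:.2f}")
```

Word overlap is a crude stand-in for “understanding” experience: it rewards CVs that echo the job ad’s vocabulary, which is one reason such rankings need human review.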

Matching algorithms

While sourcing and filtering algorithms assist recruiters, matching algorithms essentially work as a search engine for job seekers. Applicants upload their CV, which is then analysed to find the jobs best suited to the candidate’s profile and requirements. Matching algorithms can also double as sourcing algorithms: they can show job seekers relevant openings and send matched applicants to recruiters. This helps both parties, while giving the company that provides the matching service two separate revenue streams.

ZipRecruiter’s new algorithm is trained on data from billions of employer and job seeker interactions in the ZipRecruiter marketplace. It shows job seekers a match score for each job (great match, good match, fair match, or no match), giving them insight into how likely they are to be considered for a particular position. ZipRecruiter also gives applicants tips on how to improve their CV and increase their chances of success. ZipRecruiter CEO and co-founder Ian Siegel says: “Like a personal recruiter, ZipRecruiter gives job seekers information about what companies are looking for and how to make their applications more successful. Explicitly showing job seekers what our advanced matching algorithms have learned transforms the job search experience for them by cutting out the guesswork and saving them time. It’s like giving job seekers a map.”
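
ZipRecruiter has not published how its match score is computed, so the following is only a minimal sketch, assuming a match can be approximated by the share of a job’s required skills that appear in a CV. The `match_label` function and the cut-off for each label are hypothetical; the list of missing skills stands in for the kind of CV-improvement tip described above.

```python
def match_label(cv_skills, required_skills):
    """Return a match label and the required skills missing from the CV."""
    required = set(required_skills)
    if not required:
        raise ValueError("required_skills must not be empty")
    coverage = len(set(cv_skills) & required) / len(required)
    # Hypothetical cut-offs; a real system would learn these from outcome data.
    if coverage >= 0.8:
        label = "great match"
    elif coverage >= 0.6:
        label = "good match"
    elif coverage >= 0.4:
        label = "fair match"
    else:
        label = "no match"
    missing = sorted(required - set(cv_skills))
    return label, missing


label, missing = match_label(
    {"python", "sql", "git", "excel"},
    {"python", "sql", "docker", "aws", "git"},
)
print(label)                               # good match
print("Consider adding:", ", ".join(missing))  # Consider adding: aws, docker
```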

Chatbots answering basic questions

Recruitment AI doesn’t just work behind the scenes, either. Chatbots using natural language processing can reach out to applicants about job openings or gather basic information about them, reducing the need for screening interviews by humans. These bots can contact prospective candidates via email, text message, or channels like WhatsApp or Facebook. Chatbots can save recruiters time by handling up to 80 per cent of standard questions within minutes. This is particularly valuable given that more than 50 per cent of applicants give up on a position if they haven’t heard back from a company within 14 days.
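
Production chatbots rely on natural language processing models, but the triage logic (answer standard questions automatically, hand everything else to a human) can be sketched with a much simpler keyword-overlap matcher. The FAQ topics, keywords, and replies below are hypothetical placeholders.

```python
import re

# Hypothetical FAQ: topic -> (trigger keywords, canned reply).
FAQ = {
    "status": ({"status", "update", "heard", "progress"},
               "Your application is being reviewed. We will contact you once a decision is made."),
    "location": ({"remote", "office", "location", "hybrid"},
                 "This role is hybrid; see the job posting for office details."),
    "process": ({"interview", "stages", "steps", "process"},
                "The process has a screening call followed by two interviews."),
}
HANDOFF = "I'll pass your question to a recruiter, who will get back to you."


def answer(question, faq=FAQ, min_overlap=1):
    """Reply with the FAQ entry sharing the most keywords, or hand off to a human."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    best_topic, best_overlap = None, 0
    for topic, (keywords, _reply) in faq.items():
        overlap = len(words & keywords)
        if overlap > best_overlap:
            best_topic, best_overlap = topic, overlap
    if best_topic is None or best_overlap < min_overlap:
        return HANDOFF
    return faq[best_topic][1]


print(answer("Is this role remote or in the office?"))
print(answer("Can I negotiate my salary?"))
```

The handoff branch matters as much as the canned answers: questions the bot cannot match should reach a person rather than receive a confident but wrong reply.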

Many candidates also expect a customised and personalised response. Increasingly sophisticated chatbots can provide this by leveraging existing data, machine learning, and natural language processing. They are so convincing, in fact, that many candidates cannot tell they are communicating with a chatbot when asking basic questions about their job application.

Candidate assessment algorithms

While a CV may provide a lot of information on skills and accomplishments, it reveals much less about a candidate’s character and personal qualities. Candidate assessment algorithms give recruiters additional information to help them decide more carefully whom to invite for a personal interview. Pymetrics, for instance, is a company that sells neuroscience-based computer games for recruitment. The company’s BCG Pymetrics tests use data science and algorithms to identify candidates whose traits match specific job requirements. The system analyses millions of data points to match applicants to jobs judged to be a good fit, based on Pymetrics’ predictive algorithms.

The test, which can be taken on a smartphone, takes 25 minutes and consists of various short games that assess 91 skills and traits, such as focus and attention, emotional intelligence, effort, fairness and generosity, decision-making skills, risk tolerance, learning and memory, and more. Based on the candidate’s behaviour, the system adjusts the conditions of each game to measure their personal qualities more precisely. After the test, candidates receive a traits report that documents the traits assessed during the mini-games and notes which qualities are most distinctive for the candidate.
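
Pymetrics does not publish its scoring method, so this is a hedged illustration only. One common way to decide which traits are “most distinctive” is to convert each raw score into a z-score against a norm group and pick the largest deviations. The trait names, norm values, and the `distinctive_traits` function are hypothetical.

```python
def distinctive_traits(scores, norms, top_n=2):
    """Rank traits by how far the candidate's score lies from the norm group, in standard deviations."""
    z_scores = {}
    for trait, raw in scores.items():
        mean, std = norms[trait]
        if std <= 0:
            raise ValueError(f"standard deviation for {trait!r} must be positive")
        z_scores[trait] = (raw - mean) / std
    # Largest absolute deviation first: unusually high and unusually low both count as distinctive.
    ranked = sorted(z_scores.items(), key=lambda item: -abs(item[1]))
    return ranked[:top_n]


# Hypothetical norm group: trait -> (mean, standard deviation).
norms = {"attention": (50, 10), "risk_tolerance": (50, 10), "memory": (60, 5)}
candidate = {"attention": 55, "risk_tolerance": 25, "memory": 70}
print(distinctive_traits(candidate, norms))  # [('risk_tolerance', -2.5), ('memory', 2.0)]
```

Note that “distinctive” here is always relative to whoever makes up the norm group, so the choice of that group shapes what the report says about a candidate.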

Automated video job interviews

Automated video interviews consist of a set of pre-recorded questions that a job candidate answers on video. Candidates present themselves by answering the questions, showcasing their professional appearance and personality. Because all candidates get the same questions, this type of interview is also meant to make the hiring process fairer. The interview usually starts with a welcome message from the recruiter, followed by a camera and microphone test. The questions are presented either as text or as pre-recorded video. These systems often allow multiple attempts, with the final attempt being the one that is submitted. The recordings are then evaluated either by a hiring manager or by AI and facial analysis software.

Leading interview technology company HireVue, founded by former Google and McKinsey employees, says its systems are used by more than 700 businesses and that more than 10 million conversations with candidates have been held on its platform. According to HireVue CEO Kevin Parker, a leading US grocery chain made its recruitment process more efficient during the pandemic by conducting 15,000 automated video interviews per day. HireVue clients include Randstad, the Boston Red Sox, Honeywell, Intel, FedEx, Unilever, and more.

The issue of bias remains, also in algorithmic recruitment

Many employers are also looking for ways to address bias, diversity, and discrimination in hiring, and many hope that algorithms will help them avoid their own prejudices by adding fairness and consistency to the recruitment process. Recruitment and hiring platforms have taken various steps to make the algorithms they use predictable, balanced, and fair. But because discrimination and bias are fundamentally flaws in human decision making, and algorithms learn from data that reflects those decisions, it is no surprise that the same bias shows up in algorithmic decision making as well.

The data used to train algorithms is shaped by human decisions and often contains human prejudices, so algorithms can, and do, replicate historical and institutional biases. And while algorithms remove some subjectivity from the recruitment process, the final hiring decisions are still made by humans. Research has found that many hiring algorithms drift towards bias by default, and that only tools that proactively tackle deeper disparities offer any real hope of promoting fairness rather than eroding it.

A good example is the AI tool Amazon developed between 2014 and 2017 to rate job candidates. Amazon’s programmers soon realised, however, that the system favoured men. This is no surprise, as it was trained on CVs submitted to Amazon over a ten-year period, most of which came from men. The algorithm ultimately mirrored the bias towards men that Amazon itself had shown in past hiring, penalising CVs that contained the word ‘women’s’ (as in a women’s club or sport) and even marking women’s universities as ‘less preferable’. Amazon did try to adjust the algorithm to make it less biased, but eventually withdrew the system altogether.
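
The mechanism behind this kind of failure can be shown with a deliberately tiny, synthetic example; it is not a reconstruction of Amazon’s system. The sketch learns a weight for each CV word as the log ratio of how often it appears in historically hired versus rejected CVs (a naive-Bayes-style score, chosen here for simplicity). Because the made-up history rejects every CV mentioning ‘womens’, the model learns to penalise that word, and two otherwise identical CVs receive different scores. The function names and data are hypothetical.

```python
import math
from collections import Counter


def learn_token_weights(history, alpha=1.0):
    """Log ratio of smoothed word frequencies in hired vs rejected CVs (toy model)."""
    hired = [set(tokens) for tokens, was_hired in history if was_hired]
    rejected = [set(tokens) for tokens, was_hired in history if not was_hired]
    hired_counts = Counter(t for tokens in hired for t in tokens)
    rejected_counts = Counter(t for tokens in rejected for t in tokens)
    weights = {}
    for token in hired_counts.keys() | rejected_counts.keys():
        # Add-alpha smoothing so a word never seen in one group does not produce log(0).
        p_hired = (hired_counts[token] + alpha) / (len(hired) + 2 * alpha)
        p_rejected = (rejected_counts[token] + alpha) / (len(rejected) + 2 * alpha)
        weights[token] = math.log(p_hired / p_rejected)
    return weights


def score(cv_tokens, weights):
    """Sum the learned weights of the words in a CV; unknown words count as zero."""
    return sum(weights.get(token, 0.0) for token in set(cv_tokens))


# Synthetic, deliberately skewed hiring history: (CV words, was hired?).
# 'womens' stands for "women's" after punctuation is stripped.
history = [
    (["python", "captain", "chess"], True),
    (["java", "captain"], True),
    (["python", "rugby"], True),
    (["java", "chess"], True),
    (["python", "womens", "chess"], False),
    (["java", "womens", "captain"], False),
    (["python", "womens"], False),
    (["java"], False),
]
weights = learn_token_weights(history)

cv_a = ["python", "chess", "captain"]
cv_b = ["python", "chess", "captain", "womens"]  # identical except for one word
print(round(weights["womens"], 3))
print(score(cv_a, weights) > score(cv_b, weights))  # True
```

Nothing in the code mentions gender; the penalty comes entirely from the historical decisions the model was trained on, which is exactly why removing a sensitive field from the input is not enough.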

As Jordan Weissmann wrote for Slate: “All of this is a remarkably clear-cut illustration of why many tech experts are worried that, rather than remove human biases from important decisions, artificial intelligence will simply automate them. Amazon deserves some credit for realising its tool had a problem, trying to fix it, and eventually moving on. But, at a time when lots of companies are embracing artificial intelligence for things like hiring, what happened at Amazon really highlights that using such technology without unintended consequences is hard. And if a company like Amazon can’t pull it off without problems, it’s difficult to imagine that less sophisticated companies can.”

How can we ensure recruitment algorithms actually promote equity?

In our quest to promote equity when building, training, and using recruitment algorithms, regulation and industry-wide best practices play important roles. But since regulation is often slow-moving and best practices take time to be developed, tried, and tested, companies building these tools, as well as those using them, need to think beyond minimum compliance requirements. They need to evaluate whether their algorithms will actually lead to more equitable hiring, and how subjective measures of success might skew an algorithm’s predictions over time.

Algorithmic bias is a persistent problem with no easy answers. And while we may never be able to eradicate all human biases, the same technology could also be part of the solution. Algorithms could, for instance, support ‘algorithmic hygiene’: identifying specific causes of bias and applying best practices to mitigate them early in a system’s life cycle.
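
One concrete hygiene check is to compare selection rates across groups. A widely used rule of thumb from US employment guidelines, the ‘four-fifths rule’, flags potential adverse impact when a group’s selection rate falls below 80 per cent of the highest group’s rate. The sketch below applies it to made-up screening outcomes; the group labels and function names are hypothetical, and no single metric like this can establish on its own that a system is fair.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Share of candidates selected in each group, from (group, was_selected) pairs."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact(outcomes, threshold=0.8):
    """Each group's selection rate relative to the highest rate, plus groups below the threshold."""
    rates = selection_rates(outcomes)
    if not rates:
        return {}, []
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected, so there is no difference in selection rates to measure.
        return {group: 1.0 for group in rates}, []
    ratios = {group: rate / highest for group, rate in rates.items()}
    flagged = sorted(group for group, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Made-up results from an automated CV screen.
outcomes = ([("group_a", True)] * 50 + [("group_a", False)] * 50
            + [("group_b", True)] * 30 + [("group_b", False)] * 70)
ratios, flagged = adverse_impact(outcomes)
print(flagged)  # ['group_b']
```

Running a check like this regularly, and at each stage of the pipeline (sourcing, screening, interviews), is one way to catch problems early rather than after a system has been in use for years.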

According to Nicol Turner Lee, Paul Resnick, and Genie Barton, in a piece for the Brookings Institution, a US research group, “companies and other operators of algorithms must be aware that there is no simple metric to measure fairness that a software engineer can apply, especially in the design of algorithms and the determination of the appropriate trade-offs between accuracy and fairness. Fairness is a human, not a mathematical, determination, grounded in shared ethical beliefs. Thus, algorithmic decisions that may have a serious consequence for people will require human involvement.”

In closing

While it will be quite a while before AI-based systems can handle recruitment autonomously, if ever, they can save recruiters a lot of time and money by helping them process large amounts of data. Algorithms can speed up the recruitment process by doing the groundwork, such as weeding out large numbers of CVs, analysing video interviews, and selecting the most suitable candidates.

Companies can also use algorithms to predict prospective candidates’ work performance or to assess personality traits and test results. However, while these algorithms promise to reduce bias, they have also been known to introduce or amplify it and lead to discriminatory hiring decisions. It is therefore critical to introduce human oversight to ensure fair and equitable recruitment practices. Additional regulation of the use of AI will also be important to protect fairness and human rights. Making the right decisions now means we will be able to enjoy the many benefits AI has to offer while reducing the risk of unfair discrimination and bias in recruitment.
