- We’re right to have concerns about self-driving car safety

- The Uber accident offers more reasons for worry – or does it?

- Rethinking the basics – better safe than sorry

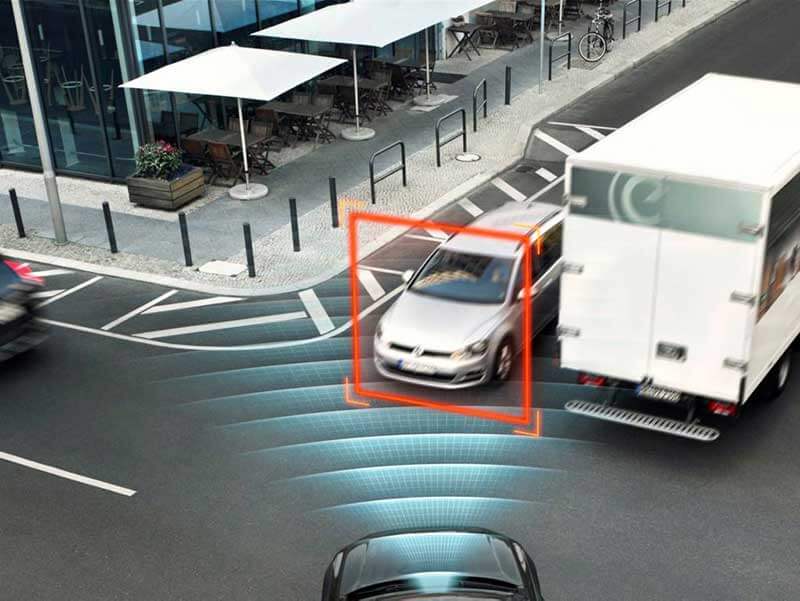

Self-driving systems promise a future free from traffic jams, congestion, and accidents, and they're clearly one of the most disruptive technologies in play right now. We've written about stunning advances in the systems that make automated driving possible, and we're betting on a bright future. But it's also fair to say that this young tech has miles to go before it's truly road-ready. Complicating matters further is the question of whether society and our infrastructure are ready to embrace intelligent cars.

In fact, most consumers are leery of automated driving; they're just not ready to trust the tech. If we choose to ride in self-driving vehicles, our safety depends entirely on the car's ability to get us to our destination in one piece. We wouldn't be at the wheel; the smart car would be in charge. And that makes a lot of people uneasy.

We get that, but it's worth keeping in mind that in the US alone, more than 37,000 people died in road accidents in 2016, and the vast majority of those crashes were caused by human error. Experts who have studied the future of self-driving systems think this is a toll we'll no longer have to fear once machines take the wheel. The RAND Corporation, for instance, suggests that self-driving systems might save tens of thousands of lives a year once they take to the roads. That's not a number to be taken lightly, and it's clear why there's so much buzz around this tech. But recent accidents involving self-driving cars have raised serious concerns and questions.

We’re right to have concerns about self-driving car safety

Self-driving systems started building momentum with Google's project, now Waymo, and later with Tesla and Uber. Google started working on its autonomous car back in 2009, and within just a few years its self-driving vehicles had driven a collective 300,000 miles. By the end of last year, the company announced that its cars had logged 4 million miles. Uber has been testing its systems in the real world, too, and recently pushed its odometer past a total of 2 million miles.

But while these figures might impress you, an accurate assessment of autonomous-car safety requires far more miles. In 2016, for instance, Americans drove nearly 3.2 trillion miles, with 1.18 fatalities for every 100 million miles driven. Those avoidable deaths really add up, and that's part of the reason we want a safer alternative. But because fatal crashes are rare, roughly one for every 85 million miles driven, driverless cars would need to log hundreds of millions of miles before their record could be compared apples to apples with that of human drivers.
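To put those mileage figures in perspective, here's a rough back-of-the-envelope sketch in Python. It uses only the 2016 figures quoted above; treating fatal crashes as a Poisson process and picking a 95% confidence level are our own simplifying assumptions, not a method used by NHTSA, RAND, or any of the companies mentioned, and the helper name `miles_to_demonstrate` is purely illustrative.

```python
import math

# 2016 US figures quoted in the text above.
US_MILES_2016 = 3.2e12          # "nearly 3.2 trillion miles"
FATALITIES_PER_100M = 1.18      # fatalities per 100 million miles driven

rate_per_mile = FATALITIES_PER_100M / 1e8

# Total deaths implied by the rate; should land near the 37,000+ cited earlier.
implied_deaths = US_MILES_2016 * rate_per_mile


def miles_to_demonstrate(rate, confidence=0.95):
    """Hypothetical helper. ASSUMES fatal crashes follow a Poisson process.

    If the true fatality rate were `rate` per mile, the chance of seeing zero
    fatalities in n miles is exp(-rate * n). Returns the smallest n for which
    that chance drops to (1 - confidence), i.e. how many fatality-free miles
    a fleet must log before we can say, at that confidence, it beats `rate`.
    """
    return -math.log(1 - confidence) / rate


needed = miles_to_demonstrate(rate_per_mile)

print(f"Implied 2016 deaths: {implied_deaths:,.0f}")
print(f"Fatality-free miles needed: {needed / 1e6:,.0f} million")
```

Under these assumptions, the 2016 rate implies about 37,760 deaths, in line with the figure cited earlier, and a fleet would need roughly 254 million miles without a single fatality to show, at 95% confidence, that it does better than human drivers. That's more than 60 times the 4 million miles Google's cars had logged, and any fatality on the record pushes the requirement higher still.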

Early tests of automated safety systems were pretty positive, but public perception soured after a crash in which the driver of a Tesla Model S electric sedan was killed. A statement from the National Highway Traffic Safety Administration (NHTSA) pointed out that, according to preliminary reports, the car failed to activate its brakes because it didn't 'see' another vehicle.

That's not exactly reassuring. Moreover, officials at the National Transportation Safety Board noted that Tesla's "Autopilot could be used on roads for which it wasn't designed, and that a hands-on-the-wheel detection system was a poor substitute for measuring driver alertness." In this case, the board found, the driver relied on the technology too much. It also found that the truck driver involved in the accident failed to "give an adequate safety margin before starting the turn". In the end, the accident had several causes: misuse of the technology, the Tesla driver's lack of alertness, and the truck driver's failure to leave an adequate safety margin.

Is this a failure of the system or the human operator? It’s not entirely clear, is it?

The Uber accident offers more reasons for worry – or does it?

But the latest Uber accident, which killed a pedestrian, may hit the pause button on self-driving systems. It's clear we need to rethink safety, but once again, a careful analysis of this tragedy doesn't reveal a clear-cut problem.

The accident occurred late on a Sunday evening at Mill Avenue and Curry Road in Tempe, Arizona. The Uber vehicle, though in self-driving mode, had a safety driver at the wheel. It was travelling at 38 mph in a posted 45 mph zone when it struck and killed a pedestrian crossing the street. According to Bloomberg Technology, "Elaine Herzberg, 49, was walking outside of a crosswalk at the time she was struck by the Uber vehicle." Tempe Police Chief Sylvia Moir said that because it was dark and the pedestrian wasn't using a marked crosswalk, the accident would have been difficult to avoid no matter who (or what) was driving.

Still, no one is taking this failure lightly.

Anthony Foxx, who served as US Secretary of Transportation under President Barack Obama, described the accident as a "wake up call to the entire [autonomous vehicle] industry and government to put a high priority on safety". And he's not alone in calling for stricter regulation. John M. Simpson, privacy and technology project director at Consumer Watchdog, noted that "The robot cars cannot accurately predict human behavior, and the real problem comes in the interaction between humans and the robot vehicles." That's hard to argue with.

Rethinking the basics – better safe than sorry

MIT Technology Review reported that both the NHTSA and the National Transportation Safety Board (NTSB) are "said to have launched probes" into what happened and what can be done in the future. Subbarao Kambhampati, a professor at Arizona State University who specialises in AI, noted that the Uber accident raises the question of whether safety drivers can really monitor these systems more effectively than the machines they're meant to back up. That's a good question, especially given how easily human monitors become inattentive or fatigued.

Of course, that’s a problem for all drivers, and one approach is to help us drive better. Bryan Reimer, a research scientist at MIT, showed that a lot can be achieved “with a system that estimates a driver’s mental workload and attentiveness by using sensors on the dashboard to measure heart rate, skin conductance, and eye movement”. This setup would provide “a kind of adaptive automation”, where the vehicle “would make more or less use of its autonomous features depending on the driver’s level of distraction or engagement”. That’s a promising approach, but it still doesn’t answer the basic self-driving safety questions.

Tesla's paying attention to these concerns and is continuing to work on its self-driving technology. "The upcoming autonomous coast-to-coast drive will showcase a major leap forward for our self-driving technology," the company said. "Additionally, an extensive overhaul of the underlying architecture of our software has now been completed, which has enabled a step-change improvement in the collection and analysis of data and fundamentally enhanced its machine learning capabilities." In essence, the hope is that the new version of Autopilot will outperform the old one, operating more smoothly and more safely.


We're not sure that either of these deaths was really the fault of the tech. In both cases, human drivers were ultimately in control, and in both cases they failed to prevent the accident. But that's cold comfort to those affected by these deaths, and it's clear that the industry and regulators need to take a step back and rethink basic safety.