- A small Catholic school installs a sophisticated security system equipped with facial recognition software

- Avigilon is AI-based surveillance software that can flag students as threats

- AI tools allow schools to monitor everything their students write on school computers

- Is surveillance technology making schools safer?

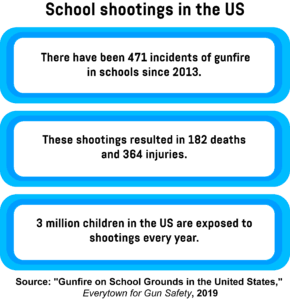

School shootings have become such a common occurrence, in the United States at least, that hardly a week goes by without us hearing about a new one, often with deadly consequences. According to Everytown for Gun Safety, an independent organisation dedicated to understanding and reducing gun violence in America, there have been at least 471 incidents of gunfire on school grounds in the United States since 2013, resulting in 182 deaths and 364 injuries.

Consequently, many teachers and students feel that going to school puts them in danger, and parents share that sentiment. The 2018 PDK Poll of the Public’s Attitudes Toward the Public Schools found that 34 per cent of parents feared for their children’s safety at school, compared with just 12 per cent in 2013. Furthermore, witnessing a shooting can have a devastating impact on a child’s wellbeing, often leading to depression, anxiety, post-traumatic stress disorder, alcohol and drug abuse, and even criminal activity.

In response to the growing number of deadly shootings, schools across the US are increasingly installing surveillance systems, access control systems, and facial recognition technology in an attempt to prevent future incidents and protect their students and staff. According to a report published by the market research firm IHS Markit, the education sector’s spending on security equipment and services reached $2.7 billion in 2017, and this figure is expected to keep growing over the coming years.

A small Catholic school installs a sophisticated security system equipped with facial recognition software

Located in a quiet residential neighbourhood in Seattle, St. Therese Catholic Academy is a small pre-K-8 school with just over 150 students and 30 staff. Before the 2018 school shootings in Parkland, Florida, and Santa Fe, Texas, the school’s security system consisted of a small camera at the entrance that let office staff decide whether someone should be allowed into the building. After those two tragedies, however, the school decided to upgrade its security system and install several high-resolution cameras equipped with facial recognition software.

SAFR (Secure, Accurate Facial Recognition) is AI-powered facial recognition software developed by the Seattle-based company RealNetworks, which decided to make it available free of charge to all K-12 schools in the United States. However, even though the software itself was free, St. Therese first had to upgrade its infrastructure to use it, which involved installing new security cameras, upgrading the school’s Wi-Fi, and buying a few Apple devices. SAFR went into use at the start of the 2018/2019 school year. All staff members have been registered in the system, and parents may soon be added as well. The school is also considering adding regular outside visitors, such as the lunch caterer, the milk delivery person, and the mail carrier, to the database.

To enter the building, authorised users simply look up and smile at the cameras placed at two of the five entryways, while anyone not in the system requires individual approval. “We don’t want to think about safety and security, but we kind of have to,” says Matt DeBoer, the principal. “This technology has taken that worry and thinking away from classroom teachers to free them up to teach.” About a dozen other schools and districts have since introduced SAFR, while hundreds more have expressed interest in doing so.

Avigilon is AI-based surveillance software that can flag students as threats

Some schools are using AI-based surveillance systems to do more than monitor who comes and goes from the building; they’re also using them to identify potential threats. A South Florida school system recently announced plans to install experimental surveillance software called Avigilon, which would enable security officials to track students based on their appearance. When the software detects a person somewhere they’re not supposed to be, or some other unusual event, it automatically alerts a school monitoring officer, who can then pull up video of everywhere that person has been recorded by the system’s 145 cameras across the campus.

Avigilon doesn’t use facial recognition technology. Instead, it relies on a feature called ‘appearance search’, which lets security officials find or flag people based on what they’re wearing. In some respects, this makes the technology even more powerful than facial recognition, because it can track a person as long as a camera can see their body, not just their face. The developers trained the software on millions of images to teach it how people look and move. As a result, Avigilon can recognise students from a distance by analysing the shape of their body, their facial attributes, their hairstyle, and the look and colour of their clothes. These images are then compared against other images captured by the system and used to build an accurate timeline of that person’s movements within seconds.
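To make the idea of appearance search a little more concrete, here is a minimal sketch of how such matching could work in principle. It assumes that each camera sighting has already been converted into a numeric “appearance vector” by some trained model, and that sightings are matched by cosine similarity against a fixed threshold. Avigilon’s actual model, features and thresholds are not public, and the names `Detection`, `cosine_similarity` and `build_timeline`, as well as the camera labels and vectors, are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Detection:
    camera_id: str          # hypothetical label for the camera that saw the person
    timestamp: float        # seconds since an arbitrary reference time
    embedding: list[float]  # appearance vector from an assumed, unspecified model


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means identical direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_timeline(query: list[float], detections: list[Detection],
                   threshold: float = 0.9) -> list[Detection]:
    """Keep sightings that look like the query person, ordered earliest first."""
    matches = [d for d in detections
               if cosine_similarity(query, d.embedding) >= threshold]
    return sorted(matches, key=lambda d: d.timestamp)


# Toy data: three sightings, two of which resemble the person being searched for.
detections = [
    Detection("gym", 30.0, [0.9, 0.1, 0.0]),
    Detection("hallway-2", 10.0, [1.0, 0.0, 0.1]),
    Detection("library", 20.0, [0.0, 1.0, 0.0]),
]
timeline = build_timeline([1.0, 0.0, 0.0], detections)
print([d.camera_id for d in timeline])  # ['hallway-2', 'gym']
```

The threshold is the key design choice in a sketch like this: lowering it finds more sightings of the same person but also pulls in more look-alikes, which is one way false matches can creep into a timeline.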

“Schools today are monitored by someone sitting in a communications center, looking at a video wall, when the attention span of the average human looking at a single camera and being able to detect events that are useful is about 20 minutes,” says Mahesh Saptharishi, the chief technology officer at Motorola Solutions, which owns Avigilon. “But when something bad is happening … you need to be able in a matter of seconds to figure out where that person is right now.”

AI tools allow schools to monitor everything their students write on school computers

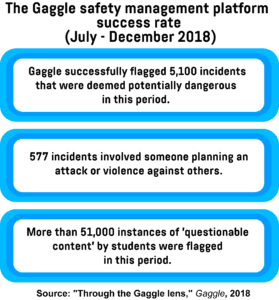

There are also other ways to detect potential threats. Some schools are implementing Safety Management Platforms (SMPs) such as Gaggle, Securly, or GoGuardian, which use natural language processing to scan millions of words that students type on school computers and flag those that might indicate bullying or self-harm. Gaggle, for example, charges around $5 per student per year and acts as a filter on top of tools like Google Docs and Gmail, automatically scanning all the files, messages, and class assignments that students create and store using school-issued devices and accounts. When its machine learning algorithm detects a word or phrase that raises a red flag, such as a mention of drugs or signs of cyberbullying, the content is first sent to human reviewers, who decide whether the school should be alerted. According to the company, Gaggle flagged 5,100 potential incidents between July and December 2018, 577 of which turned out to involve someone planning an attack or violence against others.
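The two-stage process described above, automatic flagging followed by human review, can be sketched roughly as follows. This is only an illustration under loud assumptions: it swaps Gaggle’s machine learning model for simple whole-word keyword matching, and the watch-list, the categories and the names `FLAG_TERMS`, `flag_text` and `triage` are all hypothetical. The human reviewer is represented by a function that returns `True` when an item should be passed on to the school.

```python
from __future__ import annotations

import re
from typing import Callable

# Hypothetical watch-list. A real platform would use a trained NLP
# classifier rather than a fixed list of phrases.
FLAG_TERMS = {
    "violence": ["shoot", "attack", "bring a gun"],
    "self_harm": ["kill myself", "hurt myself"],
    "bullying": ["nobody likes you", "loser"],
}


def flag_text(text: str) -> list[str]:
    """Stage 1: return the categories whose phrases appear as whole words."""
    lowered = text.lower()
    hits = []
    for category, terms in FLAG_TERMS.items():
        if any(re.search(r"\b" + re.escape(term) + r"\b", lowered) for term in terms):
            hits.append(category)
    return hits


def triage(documents: list[str],
           reviewer: Callable[[str, list[str]], bool]) -> list[tuple[str, list[str]]]:
    """Stage 2: only flagged items reach the reviewer, and only items the
    reviewer confirms become alerts for the school."""
    alerts = []
    for doc in documents:
        categories = flag_text(doc)
        if categories and reviewer(doc, categories):
            alerts.append((doc, categories))
    return alerts


def reviewer(doc: str, categories: list[str]) -> bool:
    # Stand-in for human judgement: treat sports talk as a false positive.
    return "ball" not in doc.lower()


docs = [
    "My essay on the French Revolution",
    "I want to hurt myself",
    "Our team will attack the ball",
]
alerts = triage(docs, reviewer)
print(alerts)  # [('I want to hurt myself', ['self_harm'])]
```

The toy example shows why the human step matters: the sports sentence is flagged automatically but dismissed on review, while the self-harm message is passed on.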

Securly goes a step further. In addition to monitoring what students write in tools like Google Docs, schools can use the software to run sentiment analysis on students’ public posts on Facebook, Twitter, and other social media. The software also includes what the company describes as an ‘emotionally intelligent’ app that sends parents weekly reports on their children’s internet searches and browsing histories. Securly’s CEO, Vinay Mahadik, believes that tools like this allow schools to strike the right balance between freedom and supervision. “Not everybody is happy because we are talking about monitoring kids,” says Mahadik. “But as a whole, everyone agrees there has to be a solution for keeping them safe. That’s the fine line we’re walking.”

Is surveillance technology making schools safer?

Unsurprisingly, many have voiced concerns about the proliferation of surveillance technologies in schools, especially regarding student privacy. “A good-faith effort to monitor students keeps raising the bar until you have a sort of surveillance state in the classroom,” says Girard Kelly, the director of privacy review at Common Sense Media, a non-profit that promotes internet safety education for children. “Not only are there metal detectors and cameras in the schools, but now their learning objectives and emails are being tracked too.”

The use of facial recognition technology in schools has proven particularly troublesome. Images captured by facial recognition software are usually processed in the cloud, which raises questions about how long they’re stored, how they’re used, and who has access to them. Furthermore, the technology has been shown to generate a large number of false positives and false negatives, which calls its accuracy into question. There are also fears that digital surveillance could stifle students’ freedom of expression and discourage them from speaking their minds.

As gun violence becomes a regular feature of school life in the United States, schools are increasingly turning to surveillance technologies in an attempt to protect their students and prevent further incidents. Facial recognition software that monitors who comes and goes, AI-powered systems that can track students by what they’re wearing, and AI tools that keep track of everything students write on school-issued computers are all changing the way children experience education. Schools have a duty to protect their students, which means we may have to get used to the idea of surveillance in schools. The challenge will be to ensure that safety doesn’t come at the expense of privacy.
