- Investors and entrepreneurs are jumping into the AI-chip space

- From chips smaller than a fingernail to… iPad-sized AI chips?

- New chip processes massive neural networks millions of times faster than typical computers

- Tesla AI chip technology to bring about self-driving revolution

- Chinese and European AI chipmakers enter the race

- The development race for AI chips is bound to intensify

Artificial intelligence (AI) technologies have transformed the world in the past decade. From voice recognition and biometrics to stock market trading and social media, smart algorithms are now critical assets in a wide range of industries. But running AI software comes at a cost. Analysing data and finding patterns requires huge processing power, which is why much of today’s AI computation takes place in data centres before the results are delivered to various devices. For algorithms to fulfil their potential, however, they need to run on the device itself, beyond the reach of information processing facilities. The key to these efforts lies in chips that enable machines and data centres to run increasingly powerful programs.

Until now, AI software has mostly run on graphics processing units (GPUs), providing companies with the computing power needed to train algorithms for various tasks. But there’s only so much this approach can deliver. That’s why the tech industry has started building AI chips – specialised computer processors that contain software and hardware components specifically designed to accelerate AI computations. Not only do these new chips make data centres faster, they also enable self-driving vehicles, phones, drones, robots, and other machines to independently process complex code and perform better.

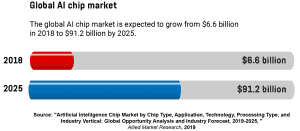

That’s why the global AI chip market is booming and is projected to reach a value of $91.18 billion by 2025. Global financial and tax consulting firm Deloitte predicts that over 750 million AI chips will be sold throughout the world in 2020 alone. From startups and universities to car companies and tech giants, a diverse range of actors are racing to develop powerful processors.

Investors and entrepreneurs are jumping into the AI-chip space

The potential of new chips to improve AI processes has attracted numerous investors and entrepreneurs. From 2015 to 2018, for instance, US venture capitalists invested over $1 billion in AI chip startups. Larger players are acquiring smaller startups, too. Intel, the world’s largest semiconductor chip manufacturer, purchased the Israeli AI chipmaker Habana Labs for around $2 billion in December 2019. Mike Demler, a microprocessor analyst at analyst firm The Linley Group, says that “It’s impossible to keep track of all the companies jumping into the AI-chip space. I’m not joking that we learn about a new one nearly every week.”

Tech corporations like Nvidia and Google have already developed AI chips customised for various AI applications. Apple is also equipping iPhones with AI chips, while China’s Huawei upgraded its Kirin smartphone chips to more effectively deal with AI-related tasks. Governments are also becoming increasingly interested in this thriving industry. The Defense Advanced Research Projects Agency (DARPA), which is in charge of developing military-related emerging technologies for the US government, is funding several AI chip projects, while Chinese authorities are also supporting companies working in this field. The industry is experiencing rapid growth, and it will be interesting to see whose chips will eventually dominate the market.

From chips smaller than a fingernail to… iPad-sized AI chips?

Computer chips are usually tiny. Many are even smaller than a fingernail, which is why the news that the US-based startup Cerebras developed an iPad-sized chip came as a surprise to many. A 22-cm square, it’s likely the largest computer chip ever built. The device “can do the work of a cluster of hundreds of GPUs, depending on the task at hand, while consuming much less energy and space”, says Cerebras founder and CEO Andrew Feldman. Also, data moves around the chip 1,000 times faster than between separate chips linked in a cluster.

But making a large chip comes with unique challenges. Most computer chips can be air-cooled, but the one designed by Cerebras requires a cool water pipe system to prevent it from overheating. Another challenge is the production process itself. The huge chip is manufactured by TSMC, a Taiwan-based contract chipmaker, which had to substantially adapt its facility and equipment to adjust to the scale of this project.

Clearly, Cerebras’ product is unlikely to end up in smartphones or tablets. Instead, the company plans to sell servers built around the chip to clients that want to take their AI projects to the next level. It’s already collaborating with other companies on some drug design projects, and big tech firms like Facebook, Amazon, and Microsoft might also be interested in using the new chip, as it would enable them to create smarter algorithms more quickly. Such a service “will be expensive, but some people will probably use it”, says Eugenio Culurciello, a hardware design expert at US-based chipmaker Micron.

New chip processes massive neural networks millions of times faster than typical computers

Experts at MIT have taken a different approach to building AI chips. The team, consisting of Dirk Englund, Alexander Sludds, and several other researchers, is developing a chip that uses light instead of electricity to run AI computations, drastically reducing energy consumption. Initial simulations indicated that the chip could process massive neural networks millions of times faster than its electrical counterparts or typical computers. This could be especially useful in reducing the energy consumption of data centres around the world. The researchers are now building a prototype chip to experimentally prove the potential of this technology.

Vivienne Sze is another MIT researcher actively working on AI chips. Together with Joel Emer, a research scientist at Nvidia and a professor at MIT, she developed a chip called Eyeriss. In several tests, the chip outperformed standard processors by a factor of 10 or even 1,000, depending on which algorithm it powered. Sze even got to present her design at MARS, a yearly event hosted by Amazon founder and CEO Jeff Bezos. The gathering brings together the most innovative minds from the AI, automation, robotics, and space industries. In addition to Eyeriss, Sze also took part in the development of a low-power AI chip called Navion, used in small drones for mapping and navigation purposes.

Tesla AI chip technology to bring about self-driving revolution

Smart algorithms are vital for the deployment of self-driving vehicles. They analyse camera and sensor data and navigate cars and trucks on busy roads. For that to happen, however, vehicles have to be equipped with powerful computers. US-based electric car maker Tesla claims its full self-driving (FSD) computer is up to that task. What makes the FSD unit particularly efficient are two AI chips designed by Tesla and manufactured by South Korean electronics giant Samsung. A single chip can perform up to 72 trillion operations per second, and the system as a whole can analyse 2,100 frames of video each second. The carmaker explains that such performance makes it “21 times faster than previous-generation hardware”.

Eight cameras, 12 ultrasonic sensors, a front-facing radar, and GPS feed the chips with huge amounts of information. The AI then analyses this data stream to decide whether the vehicle should brake, accelerate, or turn. Tesla’s CEO, Elon Musk, is sure that the new chips will bring about the self-driving revolution and put over a million fully autonomous cars on the road in 2020. But analysts warn that the famed entrepreneur might be too confident. Mike Demler, a microprocessor expert and analyst at The Linley Group, says that “On pure technical grounds, [Tesla has built] a significant chip. It’s just not the best thing since sliced bread, as Musk claims”.

Chinese and European AI chipmakers enter the race

US companies might have a lead in the AI chip market, but other regions are catching up. In China, e-commerce and tech giant Alibaba has developed a special chip called the Hanguang 800 that powers a number of AI processes. Some computing tasks that used to take hours are now completed in less than five minutes. The new technology won’t be sold to third parties as a standalone product, but will instead be used internally in operations related to product search, automatic translation on e-commerce sites, personalised recommendations, and advertising. In the future, the company might provide access to the Hanguang chips through its cloud computing unit.

In Europe, the UK-based startup Graphcore has raised $150 million in funding to expand its research and sales operations. The company is valued at $1.95 billion and already works with a range of clients, including European search engine company Qwant and investment management firm Carmot Capital. Graphcore’s chips are called Intelligence Processing Units (IPUs) and come with corresponding Poplar software. Also, the IPUs are available “for external customers on the Azure Cloud, as well as for use by Microsoft internal AI initiatives”, says Nigel Toon, the company’s founder and CEO.

The development race for AI chips is bound to intensify

From smartphones and medical tools to autonomous vehicles and e-commerce, AI technologies will be applied to many different areas. They will enable companies to make important discoveries and develop vital products. But increasingly complex algorithms require hardware with huge computing capacity, leading to a growing interest in the development of better computer processors built to power AI. These chips are vital for the future of many industries, which is why the race to develop advanced AI chips is expected to intensify.
