- AI to improve administrative efficiency in courtrooms

- Could AI replace human judges?

- AI in a supporting role in judicial systems

- UNESCO launches a course on AI and the Rule of Law

Artificial Intelligence (AI) is increasingly being implemented across many fields for a wide range of processes. Although concerns have been raised about AI’s potential to infringe human rights, it also offers opportunities to reduce human errors and flaws such as discrimination and bias, whether conscious or unconscious. One area that demonstrates both AI’s advantages and its challenges is the justice system. Judicial systems are increasingly adopting AI to assist with tasks such as streamlining administrative processes, analysing data to find legal precedents, and even making predictions and key decisions in cases.

AI to improve administrative efficiency in courtrooms

In addition to their judicial responsibilities, judges must also complete administrative work. AI tools can take over some of these duties, leaving judges with more time and energy for tasks where human input is more valuable. A report by the Vidhi Centre for Legal Policy, an independent think-tank, found that “task-specific narrow AI tools” can ease the administrative workload by filtering, prioritising, and tracking cases, providing notifications on developments, and handling electronic filing. Each legal case can involve a large number of files, records, and notes, and AI programmes can analyse and categorise these more efficiently than human workers can. Lawyers can also use them to simplify legal ‘jargon’ automatically, making it understandable for clients.

A leading law firm in India has been using a machine learning programme called ‘Kira’, developed by Canadian technology firm Kira Systems. Kira can carry out numerous tasks simultaneously, such as analysing documents to identify critical information. Other Indian law firms use a similar AI platform called ‘Mitra’, which has proven useful in the areas of case management and due diligence. Despite this promising start, there is still room for this technology to develop. According to Nikhil Narendran from law firm Trilegal, “most systems are rudimentary and require years of operations to mature as reliable technologies.” This is typical of machine learning – the algorithms are designed to improve in accuracy over time.


Due diligence processes can currently take months to complete, but algorithms can often fact-check records more quickly and accurately than humans. Divorce consultancy firm Wevorce has developed a self-guided, AI-based solution that categorises clients’ cases and quickly assigns them to legal experts. The average divorce in the US takes a year to reach a settlement and costs $15,000, so automating these processes with AI could significantly reduce both the time and the cost involved. A paper by the Vidhi Centre for Legal Policy affirms that AI can significantly boost the efficiency of administrative and research tasks, and the Supreme Court of India is even developing an AI tool called SUPACE (Supreme Court Portal for Assistance in Court Efficiency) to assist with legal research, data analysis, and other essential processes. Despite these developments, and the promise of AI in increasing administrative efficiency, the Vidhi Centre for Legal Policy report does flag the potential issue of judges becoming overly reliant on AI and making less use of their own intuition in cases. For AI-based systems to be most effective, they must work alongside human expertise rather than replace it.

Could AI replace human judges?

‘Predictive justice’ is an emerging trend in judicial systems. It refers to machine learning algorithms that estimate the probability of particular outcomes. These algorithms analyse data relating to legal disputes, cross-reference it with databases of past decisions and case-law precedents, and build models that make predictions. In principle, the software can become more accurate over time as it is given more data. Of course, these solutions would have to be used and refined extensively before they could offer sufficiently reliable insight. There is also the question of the accuracy of the initial data itself: an AI system can only ever be as accurate as the data used to train and program it. If that data reflects human errors or biases, the system will likely reproduce those biases and reinforce existing inequalities.
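The point about biased training data can be illustrated with a deliberately simplified sketch in Python. The "model" below does nothing more than count past outcomes per group; real predictive-justice tools are far more complex. The records, the group labels `A` and `B`, the "detained" outcome, and the 0.5 threshold are all hypothetical, chosen only to show that a model trained on skewed past decisions hands that skew back as a prediction.

```python
from collections import defaultdict

# Hypothetical historical records: (group, was_detained). The labels are
# invented and deliberately skewed to mimic biased past decisions.
past_decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]


def train_frequency_model(records):
    """Learn P(detained | group) by counting past outcomes for each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [times detained, total cases]
    for group, detained in records:
        counts[group][0] += int(detained)
        counts[group][1] += 1
    return {group: detained / total for group, (detained, total) in counts.items()}


def predict(model, group, threshold=0.5):
    """Predict detention whenever the learned probability exceeds the threshold."""
    return model[group] > threshold


model = train_frequency_model(past_decisions)
print(model)                # {'A': 0.75, 'B': 0.25}
print(predict(model, "A"))  # True
print(predict(model, "B"))  # False
```

Two people who differ only in group membership receive different predictions, purely because the historical decisions differed. Nothing in the training step corrects for that; it simply learns the pattern it is shown.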

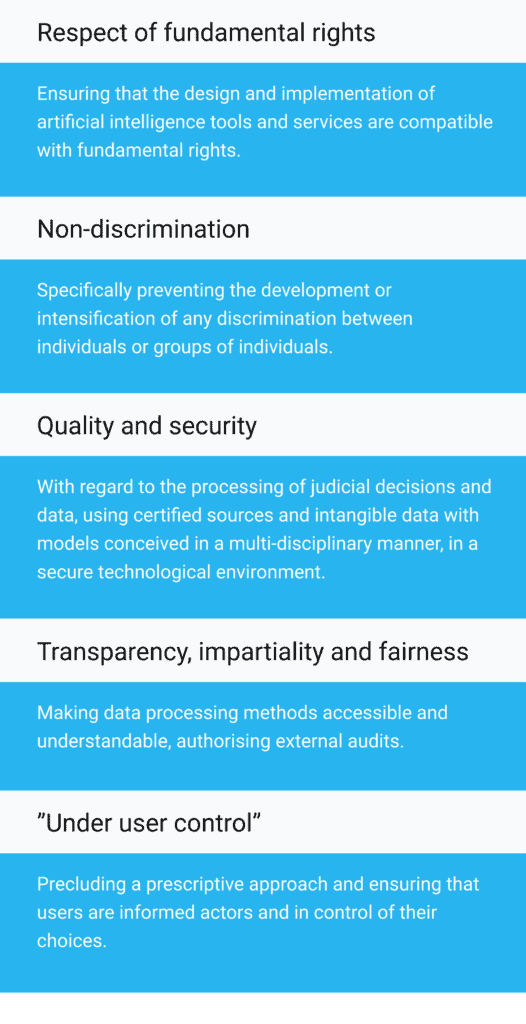

The risk of reproducing bias is one of the objections that some AI experts have raised against giving AI systems central decision-making roles in the justice system. A fully AI-based judge that is consistent and sufficiently unbiased is still a long way off, if it is possible at all. Inaccuracies in judicial decisions raise serious ethical concerns, and could expose courts and judiciaries to further lawsuits if bias or inaccuracy were suspected. Handing too much decision-making power to AI systems before they are sufficiently advanced could in fact be counter-productive. The Vidhi Centre for Legal Policy recommends that any use of AI in judicial systems be preceded by comprehensive legal and ethical frameworks to prevent these issues. A prime example of such a framework is the European Ethical Charter on the use of Artificial Intelligence in judicial systems and their environment, adopted in 2018 by the Council of Europe’s European Commission for the Efficiency of Justice (CEPEJ). The Charter was developed to guide and advise on the application of AI in the justice system, and identifies five core principles:

- Respect for fundamental rights

- Non-discrimination

- Quality and security

- Transparency, impartiality and fairness

- ‘Under user control’

The CEPEJ believes that AI can benefit justice systems, but that it must be implemented responsibly and with respect for fundamental human rights. The Charter also outlines best practices for ensuring that processes are carried out this way. Close collaboration with human legal professionals and researchers is vital for the success of these systems.


AI in a supporting role in judicial systems

The gradual adoption of AI in judicial systems can be compared to the earlier introduction of IT. In both cases, governance (the how, who, when, where, and why of decision making) is the key factor for judiciaries. IT brought both opportunities and challenges. One example is the Netherlands Judiciary, which uses various forms of IT, including court management systems, digital procedures, and electronic filing. To ensure that IT stayed under the control of the judiciary, rather than the other way around, the judiciary set up an in-house IT development organisation. In-house development has the advantage of drawing on direct experience of judicial procedures, requirements, and challenges. If IT systems are trusted to carry out essential processes, their development should remain under the judiciary’s own control. In the case of the Netherlands Judiciary, this was achieved by bringing in contracted software developers to work alongside in-house professionals with experience of that workplace, so that IT development took place under the governance of the judiciary.

The issues of bias and discrimination are key reasons why AI must, at least for the foreseeable future, be used as a tool by justice systems rather than being given full control of them. Some US jurisdictions currently use technology that calculates the risk of recidivism and assigns individuals a score indicating how likely they are to reoffend. Although these algorithms draw on a vast and ever-growing body of past case data, questions remain about how the analysis is carried out and who is accountable for the resulting decisions. The US-based non-profit organisation ProPublica studied the accuracy of COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), software used in Florida to predict the probability of recidivism. The study found that “black defendants were far more likely than white defendants to be incorrectly judged to be at a higher risk of recidivism, while white defendants were more likely than black defendants to be incorrectly flagged as low risk.” This demonstrates not only the need for accountability in the use of AI, but also the dangers of supplying algorithms with biased data. Even the most capable machine learning algorithms will be limited by the validity of the data they are initially given. Until such issues have been resolved, AI will likely be limited to a supporting role in justice systems.
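The quoted finding concerns the *types* of error a risk tool makes for different groups, not just how often it is right overall. The Python sketch below makes that distinction concrete using invented records for two hypothetical groups, `X` and `Y`. It is not COMPAS data and does not reproduce ProPublica's methodology; it only shows how false positive and false negative rates are computed per group.

```python
# Invented records: (group, flagged_high_risk, reoffended). Not real COMPAS data.
records = [
    ("X", True, False), ("X", True, False), ("X", False, False), ("X", False, False),
    ("X", True, True), ("X", True, True),
    ("Y", False, False), ("Y", False, False), ("Y", False, True), ("Y", False, True),
    ("Y", True, True), ("Y", True, True),
]


def rows_for(group):
    """Return (flagged_high_risk, reoffended) pairs for one group."""
    return [(flag, reoffended) for g, flag, reoffended in records if g == group]


def error_rates(group):
    """Return (false positive rate, false negative rate) for one group.

    False positive: flagged high risk but did not reoffend.
    False negative: flagged low risk but did reoffend.
    """
    rows = rows_for(group)
    did_not_reoffend = [flag for flag, reoffended in rows if not reoffended]
    did_reoffend = [flag for flag, reoffended in rows if reoffended]
    fpr = sum(did_not_reoffend) / len(did_not_reoffend)
    fnr = sum(not flag for flag in did_reoffend) / len(did_reoffend)
    return fpr, fnr


def accuracy(group):
    """Share of predictions in the group that matched the actual outcome."""
    rows = rows_for(group)
    return sum(flag == reoffended for flag, reoffended in rows) / len(rows)


for group in ("X", "Y"):
    fpr, fnr = error_rates(group)
    print(f"{group}: FPR={fpr:.2f} FNR={fnr:.2f} accuracy={accuracy(group):.2f}")
# X: FPR=0.50 FNR=0.00 accuracy=0.67
# Y: FPR=0.00 FNR=0.50 accuracy=0.67
```

In this toy data both groups see the same overall accuracy, yet group `X` bears all the false "high risk" flags and group `Y` all the false "low risk" ones. Splitting the errors by actual outcome is what exposes the difference; a single accuracy figure would hide it.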

While IT is now a staple of justice systems all over the world, most countries have governance structures that ultimately allow human judges to make key decisions and overrule automated systems. The same safeguard is essential for AI systems, at least at their current, incomplete stage of development. Today, IT is used extensively alongside human workers in judicial systems: its challenges have been overcome or mitigated, and its benefits optimised. The same is likely to happen with AI. New technologies rarely disappear, so the task is to put them to the best possible use.
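One way to picture a structure in which the judge keeps the final say is a decision record where the tool's output is stored only as a recommendation and the judge's ruling is what takes effect. The Python sketch below is a hypothetical illustration, not a description of any real court system; the `CaseDecision` class, its fields, and the audit-log wording are all invented for this example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class CaseDecision:
    """Hypothetical record pairing an advisory tool output with a judge's ruling."""

    case_id: str
    tool_recommendation: str  # advisory only; never takes effect by itself
    final_ruling: Optional[str] = None
    audit_log: List[str] = field(default_factory=list)

    def rule(self, judge: str, ruling: str) -> str:
        """Record the judge's ruling, which takes effect whatever the tool suggested."""
        self.final_ruling = ruling
        if ruling == self.tool_recommendation:
            self.audit_log.append(f"{judge} agreed with the tool: {ruling}")
        else:
            self.audit_log.append(
                f"{judge} overruled the tool ({self.tool_recommendation} -> {ruling})"
            )
        return ruling


decision = CaseDecision("case-001", tool_recommendation="detain")
decision.rule("Judge A", "release")
print(decision.final_ruling)  # release
print(decision.audit_log)     # ['Judge A overruled the tool (detain -> release)']
```

Keeping the recommendation and the ruling as separate fields, with a log of agreements and overrides, also supports the accountability question raised above: it remains clear afterwards who decided, and whether the tool's suggestion was followed.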

UNESCO launches a course on AI and the Rule of Law

With the support of UNESCO and the Open Society Foundations, the IEEE (Institute of Electrical and Electronics Engineers) has launched a free, global MOOC (Massive Open Online Course) on AI and the Rule of Law. At the time of writing, almost 4,000 judicial operators have registered for the course, which was developed with input from experts including Supreme Court and Human Rights Court judges. The course addresses how AI can improve the administrative processes of judiciaries, and how discrimination and bias can be tackled. It is available in seven languages and consists of six modules:

- An Introduction to Why Digital Transformation and AI Matter for Justice Systems

- AI Adoption Across Justice Systems

- The Rise of Online Courts

- Algorithmic Bias and its Implications for Judicial Decision Making

- Safeguarding Human Rights in the Age of AI

- AI Ethics & Governance Concerning Judicial Operators

Closing thoughts

Accurate decision making is particularly essential in the justice system, which is a fundamental aspect of both democracy and liberty. Issues such as discrimination, bias, and lack of transparency are often cited by those concerned about the use of AI – yet these issues can already be found widely in human-based justice systems. Although the decision-making processes of algorithms can be difficult to decipher, the same is true of understanding the mental processes used by human judges and other professionals. Neither the human brain nor a machine programmed by one can be confidently said to be free of error. In fact, issues such as human biases towards more charismatic speakers in a courtroom, and institutional biases towards those with more financial resources and societal power, could potentially be mitigated by AI playing an increasingly important role in decision-making.

AI systems must help overcome, rather than add to, these existing issues. This requires effective frameworks for developing and programming algorithms. Like any technological advance, AI has its limitations. However, it can offer significant opportunities to improve justice systems if it is implemented correctly, responsibly, and in line with the principles of ethics and human rights. Initiatives such as CEPEJ’s Charter and UNESCO’s MOOC can help ensure that AI is used in accordance with the rule of law, justice, and equality. For the time being, AI should also be used as an assistant to human workers in the justice system rather than as a replacement. If these principles are followed and technological advances in AI continue, it could have wide-ranging, positive effects.
