- Terrorists are adapting to the digital age

- Where do terrorists go once banned from Facebook or Twitter?

- Extremists find refuge in emerging apps

- Video games have become avenues of recruitment

- The dark web enables terrorists to disappear

- The failure of big tech companies to tackle terrorist content

- What can governments and tech platforms do to solve the problem?

- The need for globally coordinated policies

The rise of the internet has made it easier for people to talk to each other. Social media platforms and messaging apps have enabled millions of users to connect and have their voices heard. But far from creating a safer environment, this ease of communication has turned the online world into a breeding ground for extremists of all kinds. From ISIS terrorists to neo-Nazis, digital media enables nefarious actors to spread their message, attract new followers, and organise attacks. Terrorist groups depend on spectacle and violence to survive, and the security expert Samir Saran suggests that “technology has allowed this opera of violence to find new audiences and locales.” Although tech companies and governments are aware of this new threat, finding an effective and sustainable solution has proved to be a daunting task.

Terrorists are adapting to the digital age

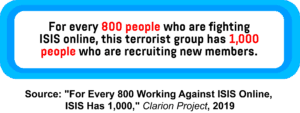

The nature of modern security threats is changing. Terrorists have moved much of their operations online, having recognised how effectively the internet reaches a wider audience. In 2016, for instance, ISIS granted its ‘digital squads’ the same rank as those fighting on the ground. And for every 800 people who fight ISIS online, the group has 1,000 people working on recruitment and producing various types of content. To produce propaganda, ISIS has, for instance, attached a GoPro camera to a home-made armed drone in Syria and urged cells in other countries to record 4K videos pledging allegiance to its leader, Abu Bakr al-Baghdadi, and to carry out violent attacks in his name. This footage is then published on social media and various websites.

Other terror groups use similar tactics. Neo-Nazis and white nationalists, for example, post treatises, tracts, and manifestos on the web, hoping to lure more people into an ideology of racial, ethnic, and religious hatred. In fact, there’s so much extremist material available online “that would have been extraordinarily difficult to get hold of 25 years ago”, says Brian Levin, the director of the Center for the Study of Hate and Extremism. Websites such as 8chan have become bastions of hate speech, and the gunman accused of killing 51 people in two mosques in Christchurch, New Zealand, even live-streamed the attack on his Facebook account and shared the link on 8chan.

Terrorism expert Nikita Malik explains that around 75 per cent of far-right group members use Facebook to disseminate their content, with the rest relying on Twitter. Islamist material, however, is shared across many more platforms, including Facebook, YouTube, Twitter, Instagram, and various encrypted apps. The convergence of technology and terrorism caught many tech giants unprepared. Initially, they refused to censor this content, arguing that such a delicate task can’t be entrusted to private businesses. After reversing course, social media companies aggressively purged violent users and tried to stop terrorist propaganda from spreading. Yet even once banned from the major platforms, terrorists still had plenty of alternatives to choose from.

Where do terrorists go once banned from Facebook or Twitter?

The first option is simply to create new accounts. That, however, usually requires a mobile phone number for verification, a hurdle that some terrorist cells overcame on an almost industrial scale. Rabar Mala, a UK citizen sentenced to eight years in prison, for instance, used 360 SIM cards to supply phone numbers to extremists in other countries so they could open new social media and messaging accounts.

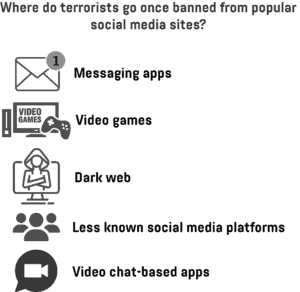

Terrorists banned from Facebook or Twitter can also turn to alternative channels, such as the encrypted messaging app Telegram and the video chat room service Paltalk. Some extremists also use VKontakte, a social media platform that is especially popular among Russian speakers and has lax security protocols for creating an account. But as even these platforms crack down on extremist content, terrorist groups have moved to emerging messaging apps, many of which offer features such as group chats and media sharing.

Extremists find refuge in emerging apps

One such app is RocketChat, an open-source messaging service geared towards business users. It offers both mobile and desktop versions and boasts around 10 million users. Amaq News Agency, ISIS’ official news outlet, was the first to create an account on the app and instructed its followers to do the same. The English-language ISIS media outlet Halummu and the discussion forum Shumukh al-Islam also opened accounts on RocketChat, uploading content from their main Telegram channels. These groups continue to grow their membership on the platform and currently have more than 700 users across several channels.

ISIS-linked propaganda also appeared on a new social media platform called Koonekti. It was spread by an account linked to the Nashir News Agency, part of the ISIS media apparatus. The page has since been removed from Koonekti and hasn’t reappeared. Jihadism specialist Abdirahim Saeed says that ISIS has also experimented with a social media platform called GoLike, as well as many other small- to medium-sized apps and services, such as Viber, Discord, Kik, Baaz, and Ask.fm, and even micro-platforms run by a single individual.

But terrorists are also looking to exploit more sophisticated technologies, such as decentralised applications (DApps). These apps, some of which run on blockchains, let individuals communicate without a central middleman, making illegal content much harder to track and ban. ISIS supporters have already experimented with decentralised services such as Riot, a client for the federated Matrix messaging network, and the peer-to-peer web platform ZeroNet. Adopting these tools on a larger scale could enable extremists and criminals to communicate through secure digital channels.

Video games have become avenues of recruitment

Banned from social media platforms, extremist groups are coming up with ever more creative ways of attracting young recruits. One of the latest avenues is online gaming, enjoyed by millions of people worldwide. Video games allow groups such as neo-Nazis and ISIS to contact potential recruits and spread propaganda. Officials in the United Arab Emirates, for example, reported that terrorists had recruited young Emiratis through online games. The US National Security Agency (NSA) is also concerned about these efforts and has monitored extremists in games such as World of Warcraft and Second Life.

Steam, a digital video game distribution platform developed by Valve Corporation, is also being used to spread hate speech. Thousands of users belong to community pages that glorify school shootings and support neo-Nazi and white supremacist groups. Extremists are also exploiting Discord, a messaging app for gamers with around 130 million users, where multiple chat groups with pro-ISIS names like “Al Bagdadi” post ISIS content and the group’s commentary on future operations. By infesting gaming communities with hateful content, terrorist groups are attracting and recruiting new members.

The dark web enables terrorists to disappear

As it becomes increasingly difficult to maintain a presence on the traditional web, terrorists are moving to the dark web: websites that aren’t indexed by search engines and can only be accessed with special software such as the Tor browser. Researchers have discovered that the so-called Electronic Horizon Foundation, a group that maintains the web security of ISIS and its supporters, runs a dark web site where it spreads propaganda and advises on how to preserve online anonymity. Security agencies are well aware of such threats and of the danger of terrorists and criminals disappearing online. Hidden in this part of the web, nefarious actors can recruit fighters and plan real-world attacks, unhindered by law enforcement.

The failure of big tech companies to tackle terrorist content

Clearly, extremists have various options to fall back on once banned from major social media platforms. Yet tech giants such as Facebook and Google argue that they’ve almost solved the problem of terrorists using digital media to recruit new members, claiming that human moderators and machine learning algorithms root out unwanted content at an unprecedented rate. Such bold claims often don’t reflect reality. In a study conducted by the Counter Extremism Project, researchers found that multiple ISIS videos were successfully uploaded to YouTube in 2018, garnering thousands of views and remaining undetected for hours.

What’s more, Facebook, Twitter, and other online platforms are notoriously ineffective at spotting hateful content in Arabic. Books such as The Management of Savagery could easily be found online, despite being well-known terrorist material. Extremists are aware that “Arabic, in addition to considering it a sacred language, provides a linguistic firewall which their adversaries find difficult to penetrate.” They also try to outsmart Facebook’s algorithms, for instance by classifying their pages as ‘educational’ and tagging terrorist material as ‘books’ or ‘public figures’. Because the algorithms are less likely to take down what they perceive as educational content, such pages are less likely to reach human reviewers, and terror-related groups can remain undetected for a long time.

What can governments and tech platforms do to solve the problem?

As terrorists continue their online and real-world rampage, politicians are trying to stop social media from spreading terrorism. New Zealand’s prime minister Jacinda Ardern and the French president Emmanuel Macron are among the many state leaders advocating a global solution to the convergence of terrorism and technology. Creating an effective policy and cybersecurity response to this problem is challenging, although projects such as PROTON might help. By combining computer science and the social sciences, this EU-funded project aims to make it easier for governments to examine how different policy options affect the recruitment efforts of terrorists and criminals.

Bertie Vidgen, a researcher at the Alan Turing Institute and the University of Oxford, argues that social media platforms could also adopt new measures to remove extremist content more effectively in the wake of terrorist attacks. One would be to make hate detection tools stricter, so that they block more content from being uploaded. Content moderators should also be allowed to work faster, without fear of poor performance evaluations, during periods when they face an especially large influx of hateful material. And by limiting users’ ability to share videos or pictures, and by creating shared databases of content, social media giants could curb the spread of propaganda and automate the removal of material already flagged by other platforms.

The need for globally coordinated policies

Terrorists have turned to the internet and digital media to recruit new members. By spreading hateful content and inciting violence against ethnic and religious minorities, terror groups such as ISIS, white supremacists, and neo-Nazis are making the world a more dangerous place. Tech giants are pushing back by developing new algorithms to remove hateful content. Governments are concerned as well, and security agencies are hunting down terrorists to prevent attacks. These efforts need to be continued and scaled up, and many countries are going further by trying to address the social injustice that drives some people to violence. Although there’s no easy solution to this problem, globally coordinated policies from governments and companies can help prevent more people from resorting to violence.
